Introduction

In the first part of this CTI-focused blog post series, we introduced the Intelligence Production Cycle and proposed a functional and technical architecture for a Cyber Threat Intelligence Platform (CTIP) integrated into and supporting both SOC and Incident Response (IR) operations.

This second part focuses on how Cyber Threat Intelligence can contribute to and support the SOC’s Threat Hunting and Detection Engineering activities through targeted Threat Actor intelligence.

Reminder: Cyber Threat Intelligence

As we saw previously, the Threat Intelligence production process can be represented as a five-step cycle:

- Planning & Direction: definition of intelligence requirements

- Collection: raw data gathering

- Processing & Exploitation: synthesis and standardization of raw data

- Analysis & Production: intelligence production from standardized data

- Dissemination: delivery of produced intelligence

Threat Hunting & Detection Engineering

Detection Engineering and Threat Hunting are both crucial activities in modern SOC operations. Although their importance is widely accepted, their definitions and associated activities often overlap. The definitions provided by Kostas in his article “Threat Hunting Series: Detection Engineering VS Threat Hunting” highlight the difference between the two concepts:

Threat hunting is a proactive practice of looking for evidence of adversarial activity that conventional security systems may miss. It entails actively searching for signs of malicious behavior and abnormalities in a network or individual hosts. Detection engineering, on the other hand, is the process of developing and maintaining detection methods to identify malicious activity after it has become known.

In this blog post we share how we designed our intelligence production process to feed and orient both Threat Hunting and Detection Engineering efforts within SOC operations.

Identifying Threat Actors & extracting relevant TTPs

Planning & Direction: Identifying which Threat Actor to focus on

Identifying the Threat Actors to focus on is key to providing valuable and actionable intelligence to SOC and IR operations. With limited resources, it is quite obvious that the CTI team cannot produce in-depth analysis for all currently active Threat Actors (TA).

To prioritize among the different TAs, you can use free-to-use CTIPs that provide strategic CTI on a given TA, such as:

NB: If you have your own CTIP you will obviously base your analysis on what you already know; however, it is still useful to cross-reference multiple sources.

Using information such as victimology (targeted sectors and countries), sophistication level, recency of activity, and so on, you can identify a shortlist of groups that are relevant threats to your business. You can also rely on trusted partners, such as national authorities, to give you further insight and maybe pinpoint specific threat actors.

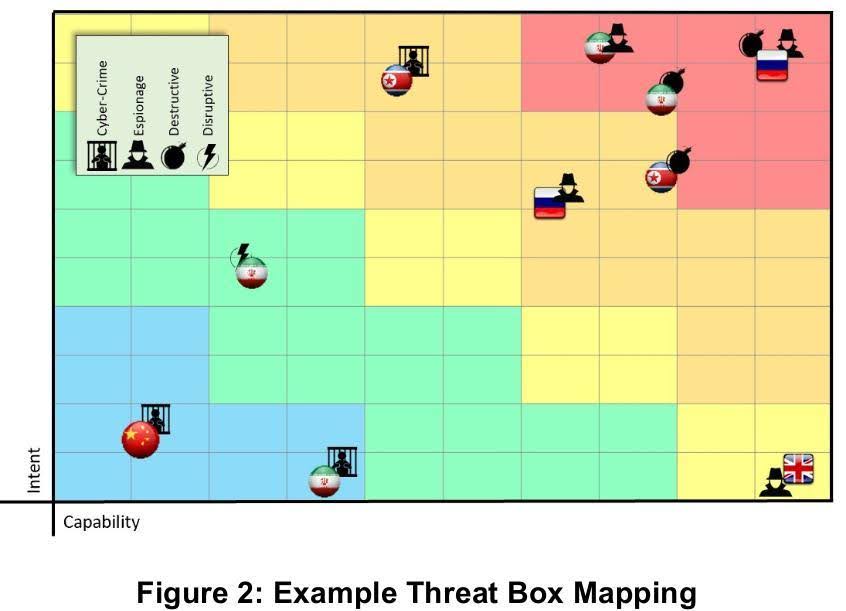

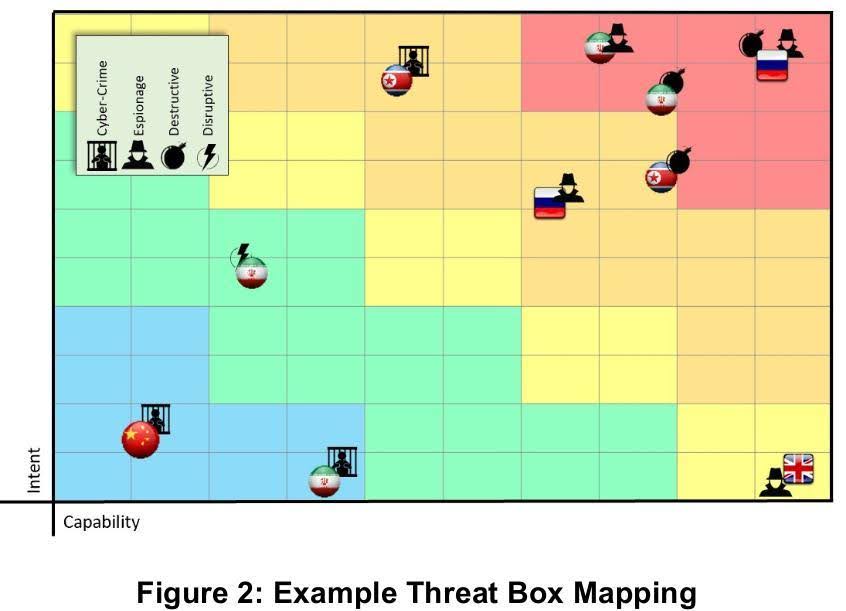
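The victimology and recency filtering described above can be sketched in a few lines of Python. This is only an illustration under our own assumptions: the `ActorProfile` fields, the actor names and the 730-day recency threshold are hypothetical and do not come from any particular CTIP.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class ActorProfile:
    # Hypothetical minimal profile; a real CTIP exposes far richer data.
    name: str
    targeted_sectors: set[str]
    targeted_countries: set[str]
    last_seen: date


def shortlist(actors, our_sectors, our_countries, today, max_age_days=730):
    """Keep actors seen recently that target at least one of our sectors and countries."""
    selected = []
    for actor in actors:
        recent = (today - actor.last_seen).days <= max_age_days
        sector_match = bool(actor.targeted_sectors & our_sectors)
        country_match = bool(actor.targeted_countries & our_countries)
        if recent and sector_match and country_match:
            selected.append(actor.name)
    return selected


# Made-up actors, for illustration only.
actors = [
    ActorProfile("Actor A", {"finance"}, {"FR", "DE"}, date(2023, 5, 1)),
    ActorProfile("Actor B", {"energy"}, {"US"}, date(2023, 6, 1)),
    ActorProfile("Actor C", {"finance"}, {"FR"}, date(2018, 1, 1)),
]
result = shortlist(actors, {"finance"}, {"FR"}, today=date(2023, 9, 1))
```

Such a filter only narrows the list; deciding which of the remaining groups deserve in-depth work is still an analyst's call.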

From here you might already be able to start your digging. However, should you want to do something more academic and be able to communicate your decisions, you can try and use SANS’ Threat Box model introduced by Andy Piazza back in 2020 to evaluate the relevance of each TA for the entity(ies) you wish to protect.

Now you have a clear and easy-to-present document backing up your decision to work on specific threats; let’s get to the librarian work: each TA’s bibliography.

Collection: TA specific bibliography

As you might have guessed, this phase is about listing all the resources you have on a specific adversary, whether official publications and articles, internal IR reports, SOC detections, or information shared under TLP:RED.

Not much to elaborate on here; however, it is important to choose and stick to a defined timeframe to build your backlog (i.e. how far back in time to go): when an adversary has been around for more than a decade, older articles might be outdated, as methodologies and attack patterns constantly evolve. It also helps you avoid being overwhelmed by the sheer amount of information available.

For our first iteration of this process, we decided to start with a two-year backlog, as we focused on widely documented and active adversaries and wanted to develop our activities.
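As a small illustration, the backlog window can be applied mechanically to the bibliography. The sketch below assumes each source is a dict with a `published` date (a hypothetical structure) and approximates a year as 365 days; the second entry is a made-up placeholder.

```python
from datetime import date, timedelta


def select_backlog(sources, today, years=2):
    """Keep only the sources published within the last `years` years (approximate)."""
    cutoff = today - timedelta(days=365 * years)
    return [s for s in sources if s["published"] >= cutoff]


sources = [
    {"title": "Volexity, Charming Kitten / POWERSTAR", "published": date(2023, 6, 28)},
    {"title": "Older vendor report (placeholder)", "published": date(2015, 3, 10)},
]
backlog = select_backlog(sources, today=date(2023, 9, 1))
```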

Processing & Analysis: TTP extraction & hunting opportunities identification

Now that you have defined and listed all resources related to your adversary to extract from, you need to dig. This TTP extraction is not a “simple” MITRE ATT&CK mapping of highlighted (or already tagged) techniques in the targeted technical articles. Instead, you need to go in-depth, understand what technique the adversary used, and how it was implemented; while asking yourself how one could hunt (i.e. query collected data) for such behavior.

This often comes with reading an article multiple times, focusing on provided resources such as technical screenshots, and pivoting to external sources to understand what is presented.

Doing so, you will be able to build a good hunting-focused TTP inventory related to your adversary; preferably in a standardized format.

Let’s take a look at a real example with Volexity’s article about Charming Kitten published in June 2023. This article provides both a clear overview of Charming Kitten’s recent operations and an in-depth analysis of their backdoor dubbed POWERSTAR. In the “Backdoor analysis” section it is mentioned that:

Interestingly, while an AES key and IV are set in the original config, Volexity observed the C2 dynamically updating the key after the initial beacon traffic. Additionally, POWERSTAR proceeds to set the IV to a random value, and then pass this to the C2 via the “Content-DPR” header of each request. In previous versions of POWERSTAR, instead of AES, a custom cipher was used to encode data during transit. The adoption of AES could be considered an improvement on the malware’s operation from previous versions.

At first glance, one might notice the change of encryption mechanism in POWERSTAR and extract this new TTP as follows:

Which is great, but is there more to extract here? We may need to ask ourselves some additional questions.

Let’s take another look at this: the article specifies that “POWERSTAR proceeds to set the IV to a random value, and then pass this to the C2 via the “Content-DPR” header of each request.”

→ Is this implementation specific to POWERSTAR C2 activity?

→ What is the Content-DPR header?

→ Is it commonly used?

→ What is usually stored in it?

According to Mozilla’s developer documentation, this header is deprecated and is commonly used “to confirm the image device to pixel ratio in requests where the screen DPR client hint was used to select an image resource.” Common values seem to be numbers between 0 and 100.

POWERSTAR stores the AES initialization vector (IV) in this header. An AES IV is 128 bits long, so the resulting value is much longer than what this header usually carries, and quite abnormal.

→ Is it relevant for hunting or detection purposes?

→ What data is required to have visibility on such behavior?

→ Is this type of behavior expected to be verbose?

The header value should therefore be unusual, which might be huntable through packet inspection or particularly verbose web traffic inspection logs. The documentation also specifies that, in normal use of DPR, “A server must first opt in to receive the DPR header by sending the response header Accept-CH containing the directive DPR.” Since the article does not mention the presence of an Accept-CH header, one may also want to hunt for an unsolicited Content-DPR header in the aforementioned telemetry.

Furthermore, as this header is marked as deprecated, its mere presence might be a flag. This hypothesis is a gamble, and therefore dangerous for operations. Statistical analysis within a production environment should be used to assess, and possibly discard, it.
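To make these two hypotheses more concrete, here is a minimal Python sketch that could be run over exported HTTP telemetry. Everything about the data shape is an assumption on our side: the event dicts (`host`, `direction`, `headers`), the regular expression describing a "normal" value, and the sample hexadecimal value are all hypothetical, and the quoted excerpt does not say how the IV is encoded in real traffic.

```python
import re

# Assumption: a legitimate value is a short decimal number such as "1" or "2.0".
_NUMERIC_DPR = re.compile(r"^\d{1,3}(\.\d{1,4})?$")


def is_abnormal_dpr(value: str) -> bool:
    """True when the header value does not look like a pixel ratio."""
    return _NUMERIC_DPR.match(value.strip()) is None


def hunt_content_dpr(events):
    """Flag requests whose Content-DPR value is abnormal or was never solicited.

    `events` is a time-ordered iterable of dicts with the hypothetical keys
    'host', 'direction' ('request' or 'response') and 'headers'.
    """
    opted_in = set()  # hosts whose server sent Accept-CH containing DPR
    findings = []
    for event in events:
        headers = {k.lower(): v for k, v in event["headers"].items()}
        if event["direction"] == "response":
            directives = [d.strip().lower() for d in headers.get("accept-ch", "").split(",")]
            if "dpr" in directives:
                opted_in.add(event["host"])
            continue
        value = headers.get("content-dpr")
        if value is None:
            continue
        reasons = []
        if is_abnormal_dpr(value):
            reasons.append("abnormal value")
        if event["host"] not in opted_in:
            reasons.append("unsolicited header")
        if reasons:
            findings.append((event["host"], value, reasons))
    return findings


# Made-up sample traffic.
events = [
    {"host": "cdn.example", "direction": "response", "headers": {"Accept-CH": "DPR, Width"}},
    {"host": "cdn.example", "direction": "request", "headers": {"Content-DPR": "2.0"}},
    {"host": "c2.example", "direction": "request",
     "headers": {"Content-DPR": "3f9a1c0e7b2d4a6588c1e0f2a4b6d8e1"}},
]
findings = hunt_content_dpr(events)
```

In practice the same logic would more likely be expressed in the query language of whatever sensor holds the telemetry; the Python version is only there to show the reasoning.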

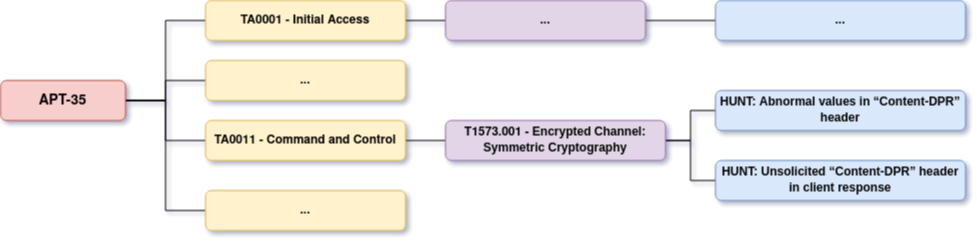

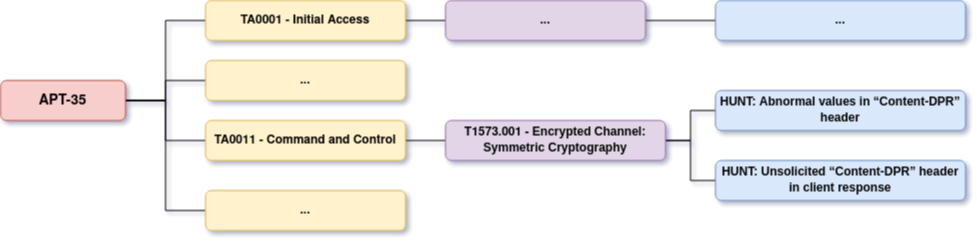

In any case, this now gives us the following TTP extraction:

| TA name | Source | Tactic | Technique | Details | Hunting opportunities | Required telemetry | Comment / Warning |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Charming Kitten | https://www.volexity.com/blog/2023/06/28/charming-kitten-updates-powerstar-with-an-interplanetary-twist/ | TA0011 Command and Control | Encrypted Channel: Symmetric Cryptography | POWERSTAR update now relies on AES cipher rather than custom cipher. | Abnormal values in “Content-DPR” header; unsolicited “Content-DPR” header in client response | SSL interception with corresponding HTTP header extraction | Requires specific sensors and configurations. Possibly resource-consuming hunt :’) |
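If you want to keep such extractions in a standardized, machine-readable format, one possible representation is a small Python dataclass mirroring the columns above. The class and field names below are our own illustrative choices, not an established schema.

```python
from dataclasses import dataclass, field, asdict


@dataclass
class TTPRecord:
    # Field names mirror the extraction columns; they are illustrative only.
    ta_name: str
    source: str
    tactic: str
    technique: str
    details: str
    hunting_opportunities: list[str] = field(default_factory=list)
    required_telemetry: list[str] = field(default_factory=list)
    comments: list[str] = field(default_factory=list)

    def is_huntable(self) -> bool:
        """A record is worth a hunting ticket only if we know what to look for and where."""
        return bool(self.hunting_opportunities) and bool(self.required_telemetry)


record = TTPRecord(
    ta_name="Charming Kitten",
    source="https://www.volexity.com/blog/2023/06/28/charming-kitten-updates-powerstar-with-an-interplanetary-twist/",
    tactic="TA0011 Command and Control",
    technique="Encrypted Channel: Symmetric Cryptography",
    details="POWERSTAR update now relies on AES cipher rather than custom cipher.",
    hunting_opportunities=[
        "Abnormal values in Content-DPR header",
        "Unsolicited Content-DPR header",
    ],
    required_telemetry=["SSL interception with HTTP header extraction"],
    comments=["Requires specific sensors and configurations."],
)
as_dict = asdict(record)  # ready to be serialized or pushed to another tool
```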

This example is of course an illustration of how we tried to extract TTPs, and more particularly hunting / detection opportunities, from CTI articles. Identifying hunting and detection opportunities is a complicated and subjective task; you might disagree with what we just extracted. The point was to highlight the different questions we asked ourselves and what sort of extra information we tried to extract along with “standard” TTPs.

Dissemination: mind map & Hunting use cases

This last part is about formatting and summarizing what you just produced. You can for instance create a synthesized mind map that gives an actionable overview of TTPs and hunting opportunities for a specific threat actor:

You can also ingest and format this knowledge in your CTIP if you have one. But keep in mind that matching the format required by your intelligence requirements might require some adaptations and compromises.

Operational insights – from an MSSP perspective

Before getting into how to consume the intelligence described above, let’s discuss some operational difficulties we faced.

From an MSSP perspective, identifying which threat actors to prioritize was easier said than done. Indeed, the variety of clients, activity sectors, geolocations, and so on made it quite tough to arrive at a reduced list of threat actors likely to target our clients. We applied the Threat Box model to some “archetypes” of our clients and cross-compared the results, but with little success. As a result, we decided to select multiple active and already encountered threats to start with, and move on to developing this activity. It is important to note that we expect the Threat Box model to be far more relevant for internal SOC / CERT operations than in our case.

Additionally, as we did not have a proper CTIP at the time, we documented the extracted TTPs manually using Excel spreadsheets and versioning. This was not ideal: collaboration with this tool was difficult, sharing with external teams was manual, and we lacked important features offered by CTIP tools such as automatic linking, querying indicators, and so on. We expect that modeling this information within a CTIP should simplify collaboration, sharing and usage of the produced intelligence.

Finally, it is important to mention that while we were developing this activity we did not have a clear specification of the intelligence requirements. We based ourselves on existing, known and documented processes, but we also chose to start by focusing on threat actors. You may want to do it the other way around, and choose your daily CTI watch as input. In other words, this was experimental and you might want to adapt this process as you see fit.

Threat Hunting & Detection Engineering efforts

From Intelligence to Hunting

Over the last few years, new technologies and tools have added significant telemetry to the well a SOC team can draw from to build its operations, while also making that telemetry easier to access. Wider adoption of these capabilities (compared with, say, a Sysmon + SIEM combo) is tipping the balance. EDRs, XDRs, NDRs, cloud security modules and SOARs make more data sources available to powerful query languages, provide more contextualization, and make it easier to correlate events with one another in more complex queries. At the same time, improved ergonomics make these tools easier for operations teams to use. For us, these new capabilities are the perfect opportunity to capitalize efficiently on the output of Threat Intelligence.

That’s where Threat Hunting and Detection Engineering activities come in. With the numerous tools available to the SOC, it is possible to craft search queries that aim to match the TTPs extracted by Threat Intelligence. This collaboration process can also be iterative, with Threat Intelligence adding TTPs to the backlog and both Threat Hunting and Detection Engineering consuming that backlog.

A search query can serve two main purposes, one for Hunting and one for Detection Engineering. Most of these sensors can schedule detections based on a search query targeting the sensor’s telemetry. Thus, a search query can often be either scheduled for detection or stored in a library of hunting queries available to SOC analysts. A detection rule (provided by Detection Engineering) is part of the SOC alerting system: it needs to be accurate, generate few false positives, and be documented well enough to be qualified and operated 24/7. Hunting rules, by contrast, can return a higher number of results or statistical results, and generally operate on a larger quantity of data. The added value of all this is the possibility of finding past compromises after a Threat Hunting session, as well as added detection capabilities for the SOC.

When we first create a search query, we do not yet know whether it will become a hunting query or a detection rule. The query is first tested on a real supervised network (in the case of an MSSP, on multiple client networks). The results of the query will lead us toward a hunting query or toward a detection rule.
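This triage step can be pictured as a simple decision on the volume of results per supervised network. The sketch below is deliberately naive: the `max_hits` threshold and the client names are hypothetical, and in reality the decision also depends on how the results qualify after manual review and tuning.

```python
def triage_query(hits_per_network: dict[str, int], max_hits: int = 5) -> str:
    """Suggest 'detection' when every test network stays under the threshold, else 'hunting'."""
    noisy = [name for name, hits in hits_per_network.items() if hits > max_hits]
    return "hunting" if noisy else "detection"


# Made-up test results over an observation period.
quiet_query = {"client-a": 0, "client-b": 2}
noisy_query = {"client-a": 1, "client-b": 340}

quiet_verdict = triage_query(quiet_query)
noisy_verdict = triage_query(noisy_query)
```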

Threat Hunting

The purpose of Threat Hunting is to deliberately look back on past activities to find hints of compromise. After running queries built specifically to look for certain TTPs, we can review the results manually and evaluate them with additional queries and other external research.

For this type of query, it’s normal to expect some legitimate activities in the results. The Hunting work consists of verifying that those activities are indeed legitimate. A hunting query can be generic and non-specific as long as the number of results is still manageable; otherwise, the results can be aggregated for statistical analysis instead.

When a query cannot be made into a detection rule, we add it to our collection of hunting queries. Hunting can be done while crafting the rule. It is also possible to re-run the entire collection of queries on a regular basis, but we have found that this kind of activity is time-consuming for a low chance of results. Finally, having such a collection of queries available is extremely useful to investigate further when an alert is generated for a different reason or during an incident response. This is where we have found the main interest of hunting queries.
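As an example of such aggregation, a common approach is to count occurrences of a field and review the rarest values first. The sketch below assumes the results were exported as a list of dicts; the `parent_process` field and the sample data are hypothetical.

```python
from collections import Counter


def rarest_values(results, key, top=3):
    """Return the `top` least frequent values of `key`, rarest first."""
    counts = Counter(r[key] for r in results)
    # Sort by count, then by value to keep the output deterministic.
    return sorted(counts.items(), key=lambda kv: (kv[1], kv[0]))[:top]


# Made-up hunting results: one rare parent process hidden in common ones.
results = (
    [{"parent_process": "explorer.exe"}] * 50
    + [{"parent_process": "services.exe"}] * 30
    + [{"parent_process": "winword.exe"}]
)
rare = rarest_values(results, "parent_process", top=1)
```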

Detection Engineering

The purpose of a Detection Rule is to be used in an alerting system and initiate an investigation from the SOC. To achieve this, it is absolutely necessary to return as little legitimate behavior as possible and only hit on malicious activities.

If this isn’t already the case after creating the query, then a tuning phase is necessary. Tuning a Detection Rule consists of excluding every legitimate activity that the query might return and adding restrictions to make the rule specific enough to only return expected results. A good detection rule does not trigger too many alerts, which are time-consuming for SOC analysts, while still detecting the expected behaviors.

This tuning work needs to be exhaustive, and it requires good knowledge of the supervised network. In our case, as an MSSP, it means having a good knowledge of the client and their activities. Examples of tuning include whitelisting certain software, account names or machines that generate too many false positives and are known to be legitimate.
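A minimal sketch of this kind of tuning, assuming query results are dicts and exclusions are kept per client as sets of known-legitimate values, could look like the following. The field names and sample values are hypothetical.

```python
def apply_tuning(results, allowlist):
    """Drop results matching any known-legitimate value.

    `allowlist` maps a field name to the set of values considered legitimate
    for this client (software, account names, machines, and so on).
    """
    kept = []
    for result in results:
        if any(result.get(name) in values for name, values in allowlist.items()):
            continue
        kept.append(result)
    return kept


# Made-up exclusions and results for one client.
allowlist = {
    "process": {"backup_agent.exe"},
    "account": {"svc_deploy"},
    "host": {"BUILD-SRV-01"},
}
results = [
    {"process": "backup_agent.exe", "account": "svc_backup", "host": "FS-01"},
    {"process": "powershell.exe", "account": "svc_deploy", "host": "APP-02"},
    {"process": "powershell.exe", "account": "jdoe", "host": "WKS-17"},
]
remaining = apply_tuning(results, allowlist)
```

Broad exclusions like these are convenient but also create blind spots, so each entry is worth documenting and reviewing over time.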

Deployment and Collaboration

Communication within an operational environment is paramount. You don’t want to have someone running amok, deploying shady stuff on your detection systems that triggers your on-call team at 3 in the morning, without so much as an explanation note. In particular it is important to determine the format in which the rules are written (proprietary language specific to a sensor? Open Source standard? etc.), including the coding style (field renaming, comments, investigation pivots, etc.); to keep track of deployment status for every client; and to version the rules so that updates can be tracked. In our case, we decided to document hunting use cases using a custom YAML format within a git project. This allowed us to automatically generate markdown documentation when releasing into production.

We first thought about following the TaHiTI methodology for reporting and communication. While very interesting, it did not fit our particular needs: TaHiTI is primarily designed to report on a threat hunting engagement, and when we tried to bend it to follow our use case (UC) development cycle it proved too heavy in this context. Instead we decided to use Jira, a ticketing system, for both reporting and distributing tasks. Each huntable TTP gets a ticket. Analysts can then assign tickets to themselves and start working on a query. The query itself is shared between the different teams in a git repository. The repository is structured by MITRE ATT&CK Tactic, and each TTP is accompanied by a metadata file written in YAML. Continuous integration checks the validity of the data and builds documentation from it. That way the repository compiles all the important information, such as the version of the rule, whether it is a hunting rule or used in detection, the main purpose of the rule, and what to look for in case of an alert.

For external communication with our MSSP clients, we provide a release note listing all newly available detection rules, highlights of some of the queries and their purpose, and the deployment status.
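To give an idea of what such a continuous integration check could do, here is a hedged Python sketch that validates a rule's metadata and renders a markdown page from it. The metadata is shown as an already-parsed dict (as a YAML loader would return it), and the field names and sample rule are hypothetical; they do not reflect our actual custom format.

```python
REQUIRED_FIELDS = {
    "title": str,
    "version": str,
    "tactic": str,
    "kind": str,
    "purpose": str,
    "investigation": str,
}
ALLOWED_KINDS = {"hunting", "detection"}


def validate_metadata(meta: dict) -> list[str]:
    """Return a list of validation errors; an empty list means the file is valid."""
    errors = []
    for name, expected_type in REQUIRED_FIELDS.items():
        if name not in meta:
            errors.append(f"missing field: {name}")
        elif not isinstance(meta[name], expected_type):
            errors.append(f"wrong type for field: {name}")
    kind = meta.get("kind")
    if isinstance(kind, str) and kind not in ALLOWED_KINDS:
        errors.append(f"invalid kind: {kind}")
    return errors


def render_markdown(meta: dict) -> str:
    """Build a small documentation page from validated metadata."""
    lines = [
        f"# {meta['title']}",
        "",
        f"- Version: {meta['version']}",
        f"- Tactic: {meta['tactic']}",
        f"- Kind: {meta['kind']}",
        "",
        "## Purpose",
        "",
        meta["purpose"],
        "",
        "## What to look for",
        "",
        meta["investigation"],
    ]
    return "\n".join(lines)


# Made-up metadata, as it could look once parsed from a YAML file.
meta = {
    "title": "Unsolicited Content-DPR header",
    "version": "1.0.0",
    "tactic": "TA0011 Command and Control",
    "kind": "hunting",
    "purpose": "Find HTTP requests carrying an unexpected Content-DPR header.",
    "investigation": "Check the destination host and the process behind the request.",
}
errors = validate_metadata(meta)
page = render_markdown(meta) if not errors else ""
```

A CI job would typically run the validation on every metadata file in the repository and fail the pipeline when the error list is not empty.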

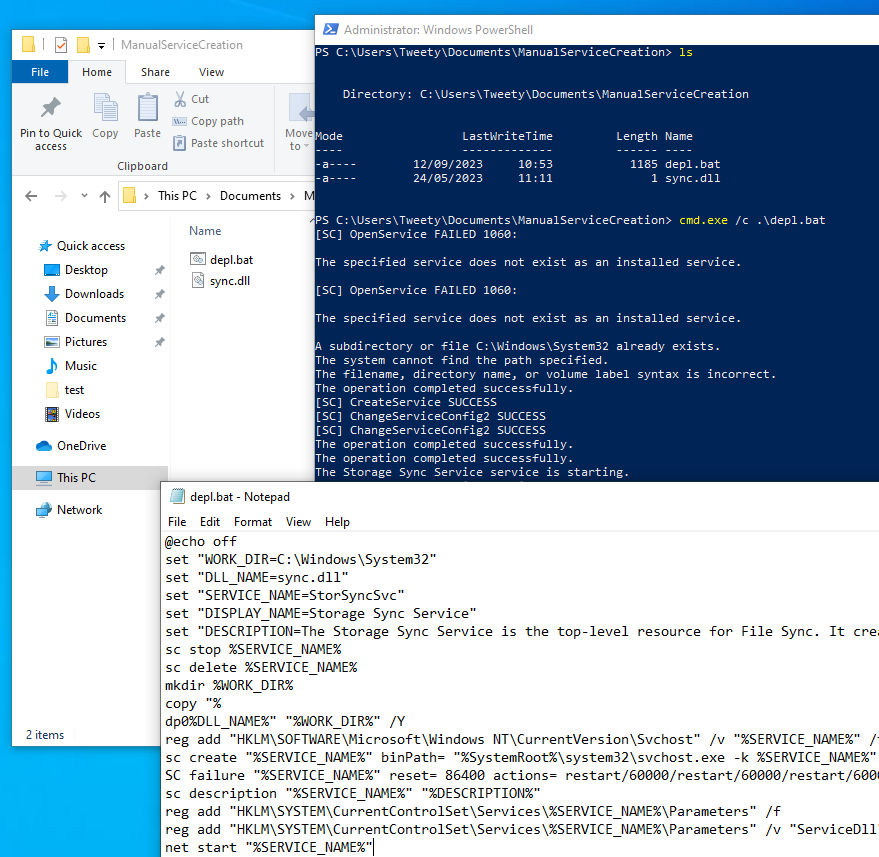

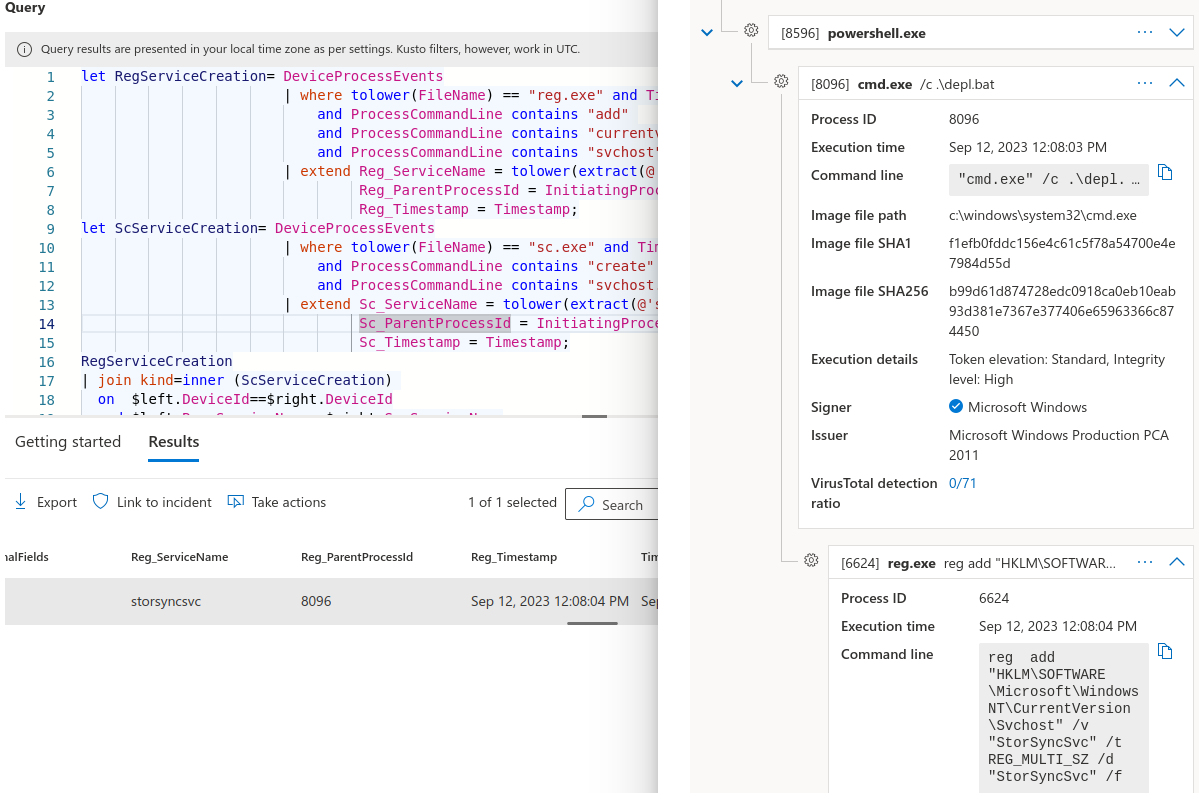

In practice

Let’s take an example. In this scenario, you are part of the detection engineering / threat hunting team. You operate mostly on Endpoint Detection & Response (EDR) sensors which provide you with extensive system telemetry and allow you to interact with monitored hosts to proactively hunt or remediate.

Your CTI team did their work and identified the following persistence script template that was used in multiple APT41 attributed campaigns:
